Data extraction and structuring is a common process for marketers. However, it requires a great deal of time and effort, and after a few days the data can change, making all that work irrelevant. That’s where web scraping tools come into play.

If you start googling web scraping tools, you will find hundreds of solutions: free and paid options, API-based and visual web scraping tools, desktop and cloud-based options, and tools for SEO, price scraping, and much more. Such variety can be quite confusing.

We created this guide to the best web scraping tools to help you find the one that fits your needs, so you can easily scrape information from any website for your marketing tasks.

What Does a Web Scraper Do?

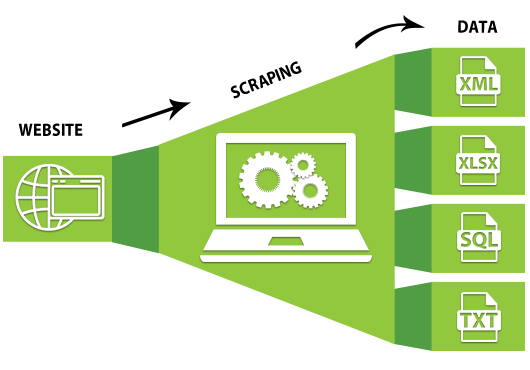

A web scraping tool is software that simplifies extracting data from websites or advertising campaigns. Web scrapers use bots to extract structured data and content: first, they retrieve the underlying HTML code, and then they store the data in a structured format such as a CSV file, an Excel spreadsheet, or an SQL database.
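To make the fetch, parse, and store steps concrete, here is a minimal Python sketch that uses only the standard library. It assumes a hypothetical page where each product sits in an `<li>` with `<span class="name">` and `<span class="price">` elements; real sites use their own markup. The HTML is inlined rather than downloaded (in practice you might fetch it with `urllib.request.urlopen`).

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical page markup. In practice this string would come from an HTTP
# request, e.g. urllib.request.urlopen(url).read().decode("utf-8").
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Phone A</span><span class="price">199.00</span></li>
  <li class="product"><span class="name">Phone B</span><span class="price">249.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Builds one {"name": ..., "price": ...} record per <li> element."""

    def __init__(self):
        super().__init__()
        self.rows = []        # finished records
        self._field = None    # "name" or "price" while inside a matching <span>
        self._current = {}    # record for the <li> being parsed

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            self.rows.append(self._current)
            self._current = {}

    def handle_data(self, data):
        if self._field:
            self._current[self._field] = data.strip()


def html_to_csv(html):
    """Parse the HTML and return the extracted records as CSV text."""
    parser = ProductParser()
    parser.feed(html)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(parser.rows)
    return buffer.getvalue()


print(html_to_csv(SAMPLE_HTML))
```

To save a file instead, you could pass `csv.DictWriter` a file object opened with `newline=""`, which is what the `csv` module documentation recommends.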

You can use web scraping tools in many ways; for example:

- Perform keyword and PPC research.

- Analyze your competitors for SEO purposes.

- Collect competitors’ prices and special offers.

- Crawl social trends (mentions and hashtags).

- Extract emails from online business directories, for example, Yelp.

- Collect companies’ information.

- Scrape retailer websites for the best prices and discounts.

- Scrape job postings.

There are dozens of other ways to use web scraping, but let’s focus on how marketers can profit from automated data collection.

Web Scraping for Marketers

Web scraping can supercharge your marketing tactics in many ways, from finding leads to analyzing how people react to your brand on social media. Here are some ideas on how you can use these tools.

Web scraping for lead generation

If you need to expand your pool of leads, you may want to contact people who fit your customer profile. For example, if you sell software for real estate agents, you need those agents’ email addresses and phone numbers. You can browse websites and collect their details manually, or you can save time and scrape them with a tool.

A web scraper can automatically collect the information you need: name, phone number, website, email, location, city, zip code, etc. We recommend starting with Yelp and Yellowpages.

You can further qualify those leads by filtering the data by keywords (for example, city) to match your exact personas. This way, you get not just leads but qualified leads.

Now, you can build your email and phone lists to contact your prospects.
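The qualify-then-build-lists step might look like this minimal Python sketch. The lead records and their field names (`name`, `city`, `email`, `phone`) are hypothetical stand-ins for whatever your scraper exports.

```python
# Hypothetical records, as a scraper might return them from a business directory.
leads = [
    {"name": "Oak Realty", "city": "Austin", "email": "info@oakrealty.example", "phone": "512-555-0101"},
    {"name": "Bay Homes", "city": "Tampa", "email": "", "phone": "813-555-0102"},
    {"name": "Hill Estates", "city": "Austin", "email": "hello@hillestates.example", "phone": ""},
]


def qualify(records, city):
    """Keep only leads located in the target city (case-insensitive match)."""
    return [lead for lead in records if lead["city"].strip().lower() == city.lower()]


def contact_lists(records):
    """Build de-duplicated email and phone lists, skipping empty fields."""
    emails = sorted({lead["email"] for lead in records if lead["email"]})
    phones = sorted({lead["phone"] for lead in records if lead["phone"]})
    return emails, phones


austin = qualify(leads, "Austin")
emails, phones = contact_lists(austin)
print(emails)  # ['hello@hillestates.example', 'info@oakrealty.example']
print(phones)  # ['512-555-0101']
```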

Web scraping for market research

With web scraping tools, you can scrape valuable data about your industry or market.

For example, you can scrape data from marketplaces such as Amazon and collect valuable information, including product and delivery details, pricing, review scores, and more.

Using this data, you can generate insights into how to position and advertise your products effectively.

For example, if you sell smartphones, scrape data from a smartphone reseller catalog to develop your pricing, shipment conditions, etc. Additionally, by analyzing consumers’ reviews, you can understand how to position your products and your business in general.
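As an illustration of turning scraped catalog data into pricing insight, the Python sketch below summarizes a few product rows. The rows and their fields (`model`, `price`, `rating`) are invented for the example, not any specific site’s format, and the 4.5 rating cut-off is an arbitrary assumption.

```python
from statistics import mean, median

# Hypothetical rows scraped from a smartphone reseller catalog.
catalog = [
    {"model": "Phone A", "price": 199.0, "rating": 4.0},
    {"model": "Phone B", "price": 249.5, "rating": 4.5},
    {"model": "Phone C", "price": 329.0, "rating": 3.5},
    {"model": "Phone D", "price": 279.0, "rating": 5.0},
]

prices = [row["price"] for row in catalog]
summary = {
    "min_price": min(prices),
    "median_price": median(prices),   # middle of the market, less skewed by outliers
    "max_price": max(prices),
    "avg_rating": mean(row["rating"] for row in catalog),
}
# Models customers rate highly hint at price points and features that resonate.
well_rated = sorted(row["model"] for row in catalog if row["rating"] >= 4.5)

print(summary)
print(well_rated)
```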

Web scraping for competitor research

You may browse through your competitors’ websites and gather information manually, but what if there are dozens of competitors, each with hundreds or thousands of web pages? Web scraping will save you a lot of time, and with regular scraping, you will always be up to date.

You can regularly scrape entire websites, including product catalogs, pricing, reviews, blog posts, and more, to stay on top of changes in your market.
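Regular scraping pays off when you compare snapshots. This Python sketch assumes each scrape produces a hypothetical `{product: price}` dictionary and reports which items are new, changed, or removed between two runs.

```python
def price_changes(previous, current):
    """Compare two {product: price} snapshots and describe what changed."""
    changes = {}
    for product, new_price in current.items():
        old_price = previous.get(product)
        if old_price is None:
            changes[product] = ("new", None, new_price)
        elif new_price != old_price:
            changes[product] = ("changed", old_price, new_price)
    # Products present last time but missing now.
    for product in previous.keys() - current.keys():
        changes[product] = ("removed", previous[product], None)
    return changes


# Hypothetical snapshots of a competitor's pricing page.
last_week = {"Plan Basic": 29.0, "Plan Pro": 79.0, "Plan Team": 149.0}
this_week = {"Plan Basic": 29.0, "Plan Pro": 69.0, "Plan Enterprise": 299.0}

for product, (status, old, new) in sorted(price_changes(last_week, this_week).items()):
    print(f"{product}: {status} ({old} -> {new})")
```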

Web scraping can be incredibly useful for PPC marketers to get an insight into competitors’ advertising activities. You can scrape competitors’ Search, Image, Display, and HTML ads. You’ll get all of the URLs, headlines, texts, images, country, popularity, and more in just a few minutes.

Web scraping for knowing your audience

Knowing what your audience thinks and what they talk about is priceless. That’s how you can understand their issues, values, and desires to create new ideas and develop existing products.

Web scraping tools can help here too. For example, you can scrape trending topics, hashtags, locations, and your followers’ personal profiles to learn more about your ideal customer personas, including their interests and what they care about and discuss. You may also build a network of profiles to market to specific audience segments.

Web scraping for SEO

Web scraping is widely used for SEO purposes. Here are some ideas about what you can do:

- Analyze robots.txt and sitemap.xml.

- Track page rankings over time by scraping SERPs for given keywords.

- Check page titles and meta descriptions, analyze their length, and collect headings of every level (H1-H6).

- Check image Alt attributes.

- Check page response codes.

- Analyze internal linking and backlinks.

- Find broken links.

- Scrape competitors’ URLs, headlines, keywords, and meta tags.

- And much more.
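Several of these on-page checks can be scripted. The Python sketch below uses the standard library’s `html.parser` to collect the title, meta description, headings, and images without alt text from a single page. The 60- and 160-character limits are common rules of thumb rather than official thresholds, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser

HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class SeoAudit(HTMLParser):
    """Collects the title, meta description, H1-H6 headings, and images without alt text."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self.headings = []      # [tag, text] pairs in document order
        self.missing_alt = []   # src values of <img> tags with no or empty alt
        self._open = None       # "title" or the heading tag currently open

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "title" or tag in HEADINGS:
            self._open = tag
            if tag in HEADINGS:
                self.headings.append([tag, ""])
        elif tag == "meta" and (attr.get("name") or "").lower() == "description":
            self.description = attr.get("content") or ""
        elif tag == "img" and not (attr.get("alt") or "").strip():
            self.missing_alt.append(attr.get("src") or "")

    def handle_endtag(self, tag):
        if tag == self._open:
            self._open = None

    def handle_data(self, data):
        if self._open == "title":
            self.title += data
        elif self._open in HEADINGS:
            self.headings[-1][1] += data


def audit(html, title_max=60, description_max=160):
    """Return a list of human-readable issues found on one page."""
    page = SeoAudit()
    page.feed(html)
    issues = []
    title = page.title.strip()
    if not title:
        issues.append("missing title")
    elif len(title) > title_max:
        issues.append(f"title is {len(title)} characters")
    if not page.description:
        issues.append("missing meta description")
    elif len(page.description) > description_max:
        issues.append(f"meta description is {len(page.description)} characters")
    h1_count = sum(1 for tag, _ in page.headings if tag == "h1")
    if h1_count != 1:
        issues.append(f"expected one H1, found {h1_count}")
    issues += [f"image without alt: {src}" for src in page.missing_alt]
    return issues


SAMPLE_PAGE = """<html><head><title>Running Shoes for Trail and Road | Example Store</title>
<meta name="description" content="Compare trail and road running shoes."></head>
<body><h1>Running Shoes</h1><h2>Trail</h2><img src="trail.jpg">
<img src="road.jpg" alt="Road shoe"></body></html>"""

print(audit(SAMPLE_PAGE))  # ['image without alt: trail.jpg']
```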

Blog content scraping

If you are into content marketing, you know how important it is to keep an eye on competitors’ blogs. However, we by no means recommend scraping someone else’s content to steal it. Instead, use it to develop your own ideas.

Here is how to do it: scrape your competitors’ blog URLs, post titles, and meta tags to understand which topics you should cover to attract qualified traffic.
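A rough way to see which topics competitors cover is to count recurring words in their scraped blog titles. This Python sketch uses a hypothetical stopword list and invented sample titles; it is a starting point for spotting themes, not a substitute for keyword research.

```python
import re
from collections import Counter

# Hypothetical stopword list; extend it for your niche.
STOPWORDS = {"a", "an", "the", "to", "for", "of", "and", "in", "how", "your", "with", "vs"}


def topic_counts(titles, top=5):
    """Count the most common non-stopword terms across blog titles."""
    words = []
    for title in titles:
        words += [w for w in re.findall(r"[a-z0-9]+", title.lower()) if w not in STOPWORDS]
    return Counter(words).most_common(top)


titles = [
    "How to Lower Your Google Ads CPC",
    "Google Ads vs Microsoft Ads: Which to Choose",
    "10 Keyword Research Tips for PPC",
    "Keyword Match Types Explained",
]
print(topic_counts(titles, top=3))
```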

Web scraping for sentiment analysis

Scrape social media for hashtags and mentions of your products, brand, and location to get an insight into customers’ sentiments. You can search and filter messages by keywords to sort posts by theme, emotion, specific product, location, etc.
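The keyword filtering step can be sketched in Python as follows. The theme keyword sets are hypothetical, and the matching is plain word lookup, so this sorts posts by theme but does not score emotion; scoring sentiment usually calls for a dedicated model or service.

```python
import re

# Hypothetical theme keywords; tune them to your own products and audience.
THEMES = {
    "shipping": {"delivery", "shipping", "late", "arrived"},
    "price": {"price", "expensive", "cheap", "discount"},
    "quality": {"broke", "quality", "durable", "sturdy"},
}


def sort_by_theme(posts):
    """Group posts under every theme whose keywords they mention."""
    grouped = {theme: [] for theme in THEMES}
    for post in posts:
        words = set(re.findall(r"[a-z']+", post.lower()))
        for theme, keywords in THEMES.items():
            if words & keywords:
                grouped[theme].append(post)
    return grouped


posts = [
    "Delivery was late again #brandname",
    "Great price with the spring discount",
    "Sturdy and durable, worth the price",
]
for theme, matches in sort_by_theme(posts).items():
    print(theme, len(matches))
```

A post can land under several themes (the third sample post counts for both price and quality), which is usually what you want when tallying mentions.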

Scraping Twitter data

Want to know what influencers in your industry are talking about? Scrape and analyze their tweets to find out.

You may also analyze the most successful tweets from your competitors to understand what resonates with your audience.

Scraping Reddit data

If you need to explore what communities are talking about, go to Reddit. Here, you can find topics with a high volume of upvotes or downvotes and look for your competitors’ activities in subreddits.
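Reddit has long exposed listings as JSON (for example, by appending `.json` to a subreddit URL), although its access rules have changed over time, so check its current API terms before scraping. Assuming you already have such a listing, this Python sketch keeps the highest-scoring posts. The sample JSON is trimmed and invented for illustration, and `top_posts` is a hypothetical helper.

```python
import json

# Trimmed, invented sample shaped like a Reddit listing response.
SAMPLE_LISTING = """{"kind": "Listing", "data": {"children": [
  {"kind": "t3", "data": {"title": "Is broad match worth it now?", "score": 184, "num_comments": 97}},
  {"kind": "t3", "data": {"title": "Our CPC doubled overnight", "score": 342, "num_comments": 150}},
  {"kind": "t3", "data": {"title": "Weekly tools thread", "score": 12, "num_comments": 8}}
]}}"""


def top_posts(listing_json, min_score=100):
    """Return (title, score, comments) for posts at or above min_score, best first."""
    listing = json.loads(listing_json)
    posts = [child["data"] for child in listing["data"]["children"]]
    popular = [post for post in posts if post["score"] >= min_score]
    popular.sort(key=lambda post: post["score"], reverse=True)
    return [(post["title"], post["score"], post["num_comments"]) for post in popular]


for title, score, comments in top_posts(SAMPLE_LISTING):
    print(f"{score:>4} points, {comments:>3} comments: {title}")
```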

Types of Web Scraping Tools

Now, let’s see what kind of web scraping tools you can find to solve all of the marketing tasks we’ve discussed above.

Desktop and cloud-based web scrapers

Cloud-Based Web Scrapers

The main advantage of cloud-based web scrapers is that you don’t need to download and install anything on your computer. All the work is done in the cloud, and you only download the results. Such parsers may offer a web interface, an API, or both (an API is especially helpful if you want to automate data parsing and run it regularly).

Here are some examples of cloud-based web scrapers:

- Import.io,

- Mozenda (a desktop version is also available),

- Octoparse (a desktop version is also available),

- ParseHub.

You can test any of the services listed above for free. However, the free tiers are only enough to evaluate the basic features and get acquainted with the functionality. They are also restricted, either by the amount of data you can parse or by how long you can use the service (usually 14 days).

Web Scraping Tools for Desktop

Most desktop-based parsing tools are designed for Windows. However, you can run them on macOS in a virtual machine. Some scrapers also have portable versions that you can run from a USB flash drive or an external drive.

As we have already mentioned, popular services such as Mozenda and Octoparse have both cloud and desktop versions. Another example of a very successful desktop-based crawler is the SEO Spider from Screaming Frog.

Web scraping tools by technology

Browser extensions for web scraping

There are several browser extensions that collect the necessary data from the source code of pages and allow you to save it in a convenient format (for example, in XML or XLSX).

Such extensions are a good option if you need to collect small amounts of data (from one or a couple of pages). Here are some popular scrapers for Google Chrome:

Add-Ins for Excel

Marketers can also scrape the web with Microsoft Excel add-ins, such as the Scrape HTML Add-In. Such scrapers use macros, and the results are written directly to XLS or CSV.

Google Sheets

With Google Sheets and two simple formulas, you can scrape data from many public web pages for free.

These formulas are IMPORTXML and IMPORTHTML.

- IMPORTXML

The function uses the XPath query language and lets you parse data from XML feeds, HTML pages, and other sources.

This is what the function looks like (semicolons separate arguments in locales that use a decimal comma; elsewhere, use commas):

=IMPORTXML("https://site.com/catalog"; "//a/@href")

The function takes two arguments:

- A link to the page or feed from which you want to get data.

- An XPath query that specifies which data element to extract.

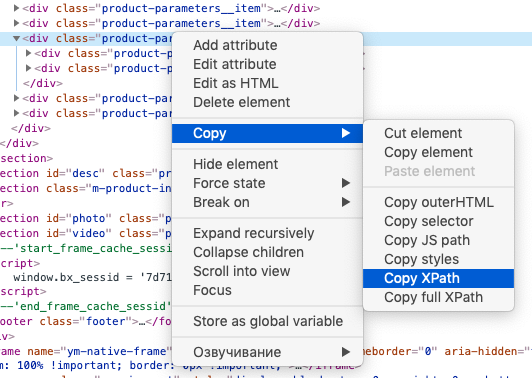

The good news is that you don’t have to learn XPath syntax to get started. To get the XPath for an element, open your browser’s developer tools, right-click the element in the inspector, and select Copy → Copy XPath (menu names vary slightly between browsers).

With IMPORTXML, you can collect almost any data from HTML pages: headers, descriptions, meta tags, prices, etc.
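For comparison, here is roughly what the `//a/@href` query does, sketched in Python with the standard library’s `xml.etree.ElementTree`. ElementTree supports only a subset of XPath (it cannot select attributes with `/@href`) and requires well-formed XML, so the sample page below is hypothetical, clean XHTML, and `all_hrefs` is an illustrative name.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed XHTML. ElementTree is an XML parser, so ordinary
# HTML with unclosed tags or bare "&" characters would raise ParseError.
PAGE = """<html><body>
  <a href="/catalog/chocolate">Chocolate</a>
  <a href="/catalog/coffee">Coffee</a>
  <a>No link here</a>
</body></html>"""


def all_hrefs(xml_text):
    """Rough equivalent of the XPath //a/@href."""
    root = ET.fromstring(xml_text)
    # ".//a" is within ElementTree's XPath subset; attribute selection ("/@href")
    # is not, so read the attribute from each matched element instead.
    return [a.get("href") for a in root.findall(".//a") if a.get("href") is not None]


print(all_hrefs(PAGE))  # ['/catalog/chocolate', '/catalog/coffee']
```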

- IMPORTHTML

This function is more limited: you can use it only to collect data from tables or lists on a page. Here is an example of the IMPORTHTML function:

=IMPORTHTML("https://site.com/catalog/chocolate"; "table"; 3)

It takes three arguments:

- A link to the page from which you need to collect data.

- The type of element that contains the necessary data: specify “table” to collect data from a table or “list” to parse a list.

- The index of that table or list on the page, counting from 1 in the order it appears in the page source (tables and lists are counted separately).
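To show what the index argument means, this Python sketch collects every `<table>` on a page and returns the one at a 1-based position, mirroring IMPORTHTML’s third argument. It is a simplified stand-in built on our own assumptions: it ignores lists, nested tables, and cells without explicit closing tags, and `import_table` is a hypothetical name.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collects every <table> on a page as a list of rows (lists of cell text)."""

    def __init__(self):
        super().__init__()
        self.tables = []
        self._cell = None   # text buffer for the current <td>/<th>, or None

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self.tables[-1].append([])
        elif tag in ("td", "th"):
            self._cell = ""

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None and self.tables and self.tables[-1]:
            self.tables[-1][-1].append(self._cell.strip())
            self._cell = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell += data


def import_table(html, index):
    """Return the index-th table (1-based, like IMPORTHTML's third argument)."""
    parser = TableParser()
    parser.feed(html)
    return parser.tables[index - 1]


PAGE = """
<table><tr><th>Brand</th></tr><tr><td>Acme</td></tr></table>
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Dark chocolate</td><td>3.50</td></tr>
  <tr><td>Milk chocolate</td><td>2.90</td></tr>
</table>"""

print(import_table(PAGE, 2))
# [['Product', 'Price'], ['Dark chocolate', '3.50'], ['Milk chocolate', '2.90']]
```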

10 Best Web Scraping Tools for Marketers

Now, let’s talk about specific tools that can help with your marketing activities.

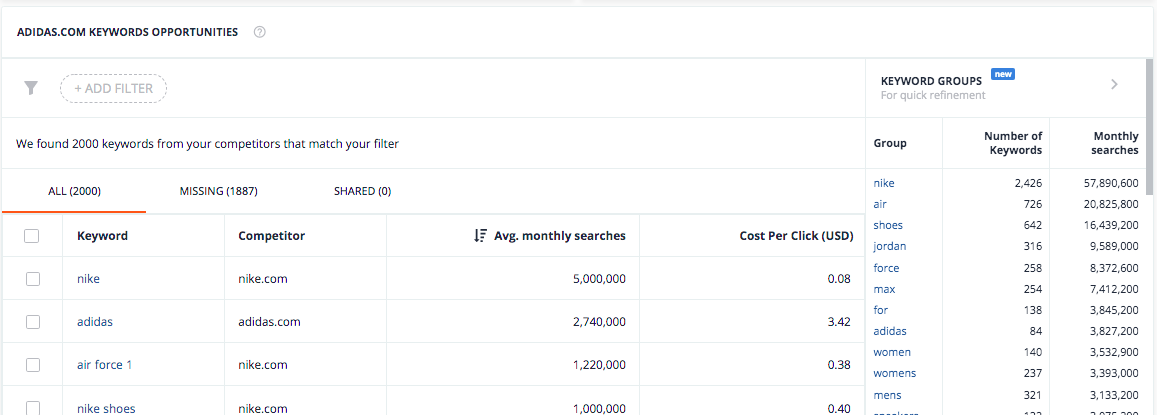

PromoNavi’s Competitor Analysis

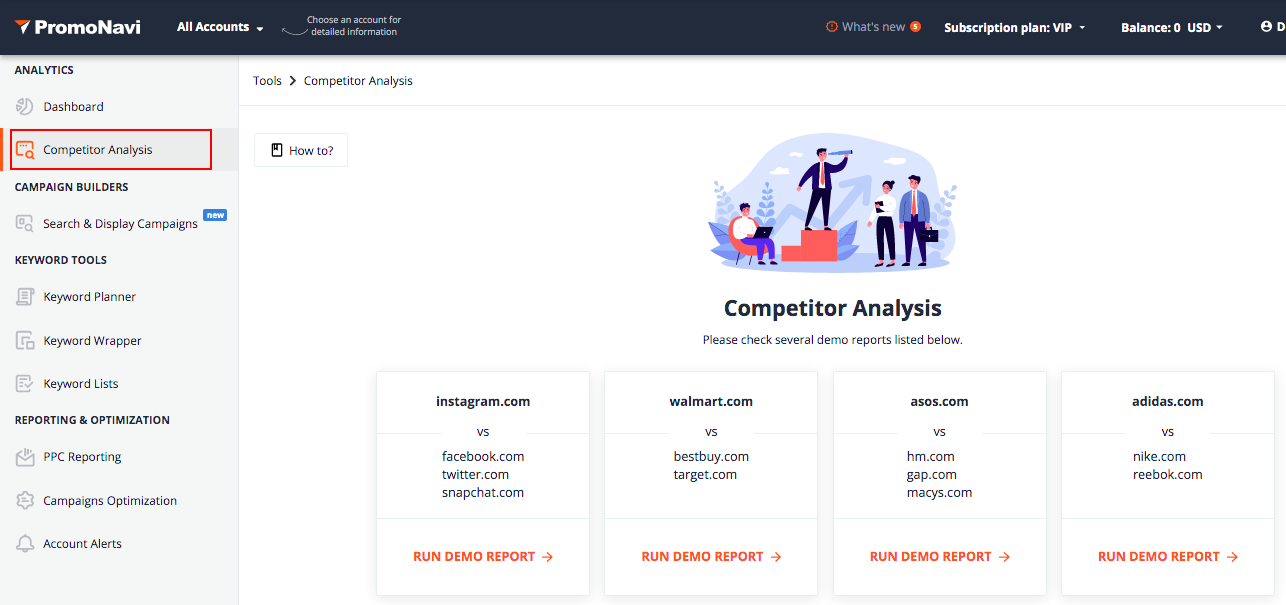

The Competitor Analysis tool is included in PromoNavi’s basic pricing plan, and you can access it from the main menu on the left.

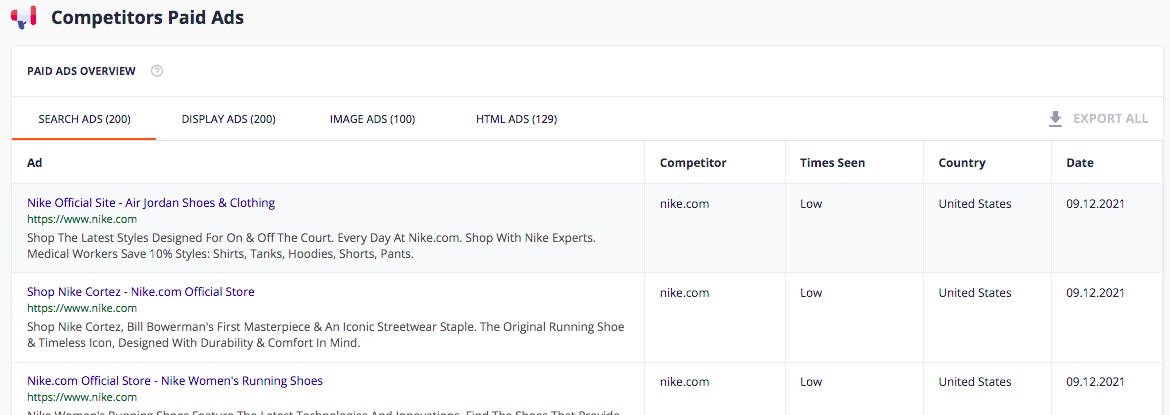

In the Competitors Paid Ads section, you will find your competitors’ Google Ads for a selected period, grouped into four categories: Search Ads, Display Ads, Image Ads, and HTML Ads. PromoNavi scrapes not only the ad content (including images) but also each ad’s popularity, country, and date.

Also, you will find the exact keywords your competitors are using in their advertising.

Treat this data as a source of keyword opportunities. You can even add keywords or keyword groups to your Google Ads account from the same interface, or simply save them.

Pricing: The Competitor Analysis feature is included in the PromoNavi subscription, starting at $49 per month. You can start with a 14-day free trial.

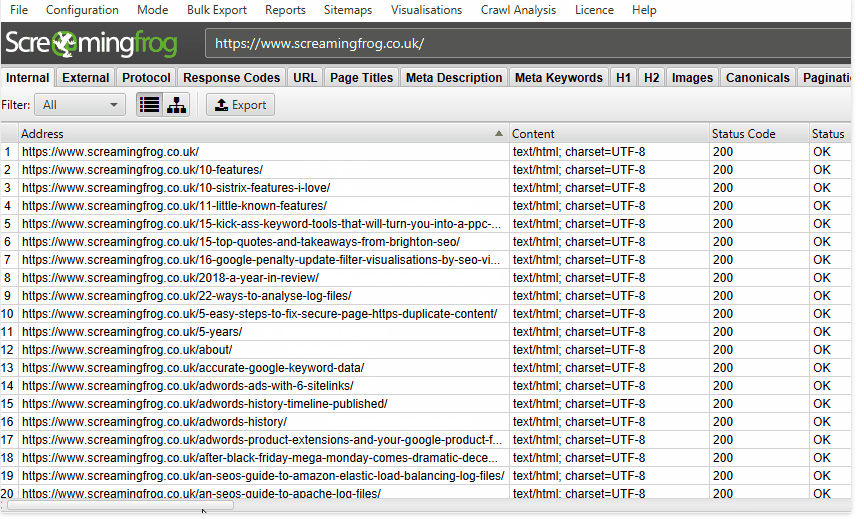

Screaming Frog SEO Spider

SEO Spider from Screaming Frog is a multifunctional desktop-based website crawler for a wide range of SEO tasks:

- Find broken links

- Analyze page Titles & Meta Data

- Audit redirects

- Discover duplicate content

- Review robots & directives

- Extract data with XPath

- Generate XML sitemaps

- Visualise site architecture

- And many others

Pricing: £149.00 per year; a limited free version is available (for up to 500 URLs).

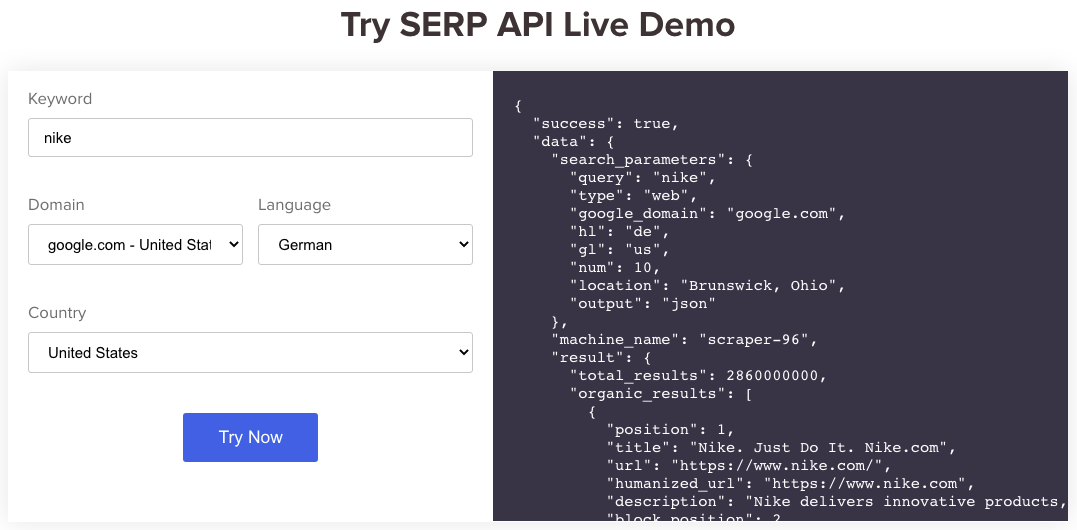

AvesAPI

AvesAPI is another tool for SEO experts. It uses its own infrastructure to scrape the top 100 search results in real time.

AvesAPI focuses narrowly on SERPs, so you can’t use it for broader web scraping.

Here are the main features of this pinpoint scraping tool:

- Get Top-100 results from any location and language

- Perform Geo-specific search for local results

- Collect ads and organic results with all SERP features

- Get real-time data in JSON or HTML

Pricing: free for 1,000 searches; paid plans starting from $50 for 25,000 searches.

Parsehub

Parsehub is a web scraping tool for all kinds of tasks. It can be helpful not only for marketers but also for developers, sales teams, journalists, analysts, and others who work with data online.

Here are some features of Parsehub:

- Extracts text, HTML, and attributes

- Scrapes and downloads images/files

- Scrapes data hidden behind a log-in

- Scrolls pages, uses pagination and navigation

- Searches through forms and inputs

- Collects data from tables and maps

- Navigates between websites

- Downloads CSV and JSON files

- And much more

Pricing: from $149 monthly for the Standard version; a free plan is also available.

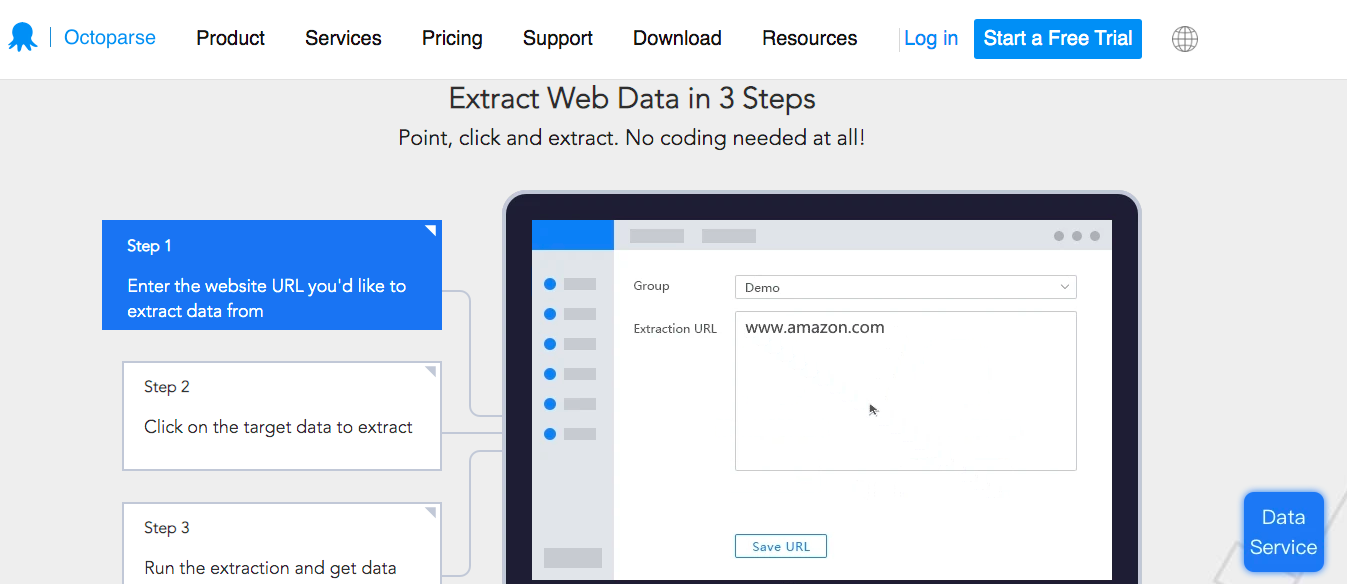

Octoparse

Octoparse is an easy-to-use visual web scraping tool that requires no coding skills. With the free account, you can scrape up to 10,000 records. All you need to do is enter the website URL, select the data you want to download, and start the extraction.

Here are some features of the service:

- Scrapes behind forms and logins.

- Extracts AJAX supplied data.

- Ad Blocking optimizes tasks by reducing loading time.

- Uses proxies to hide your IP address.

- Many data export formats: CSV, Excel, XML, MySQL, SQLite, SQL Server, OleDB, and Oracle.

Pricing: free for up to 10,000 records per export; other plans start from $75 per month.

Import.io

Import.io does more than just scraping and organizing unstructured data. Using this tool, you can also:

- Systemize the data with 100+ spreadsheet-like formulas;

- Visualize data with custom reports;

- Integrate with your business systems via its API.

Pricing: custom.

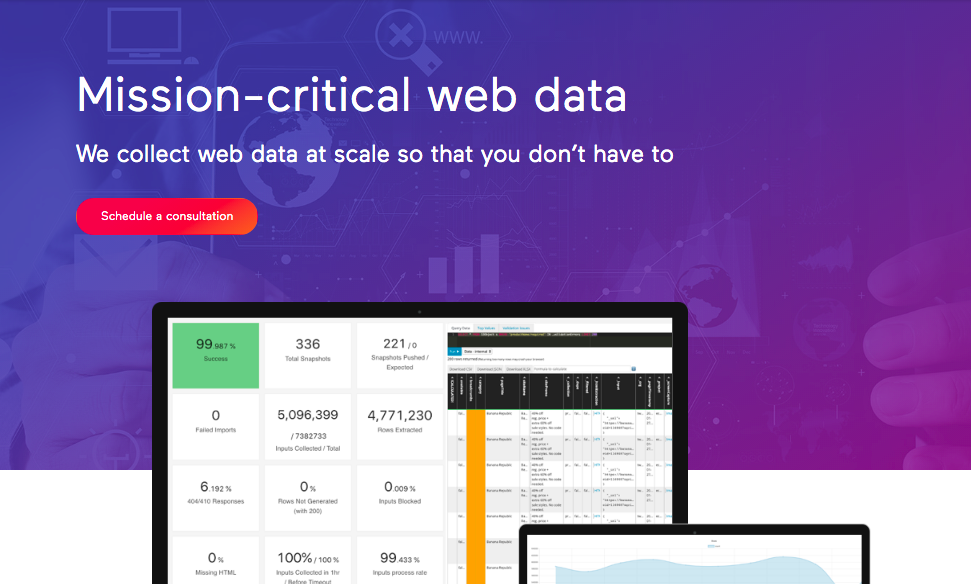

Content Grabber from Sequentum

Content Grabber has many advanced features, but it is better suited to users with programming skills, since it supports script editing. For example, you can use C# or VB.NET to write regular expressions, or generate matching expressions with the built-in Regex tool.

Pricing: custom.

BrightData (ex Luminati Networks)

BrightData is a web data platform that combines proxy networks with data extraction tools.

You can profit from the following features:

- Search engine crawler

- Proxy API

- Data unblocker

- No-code, open-source proxy management

- Browser extension

Pricing: There are two pricing categories: Pay as you go (from $5.00/1,000 page loads) and Subscriptions starting from $315 monthly.

Mozenda

Mozenda is a cloud-based web scraping solution with many useful features. Brands like Tesla, Marriott, CNN, Bank of America, and many others trust Mozenda for data scraping.

Mozenda not only helps you collect data but also offers further services such as data wrangling — from raw data to clean information.

Pricing: custom.

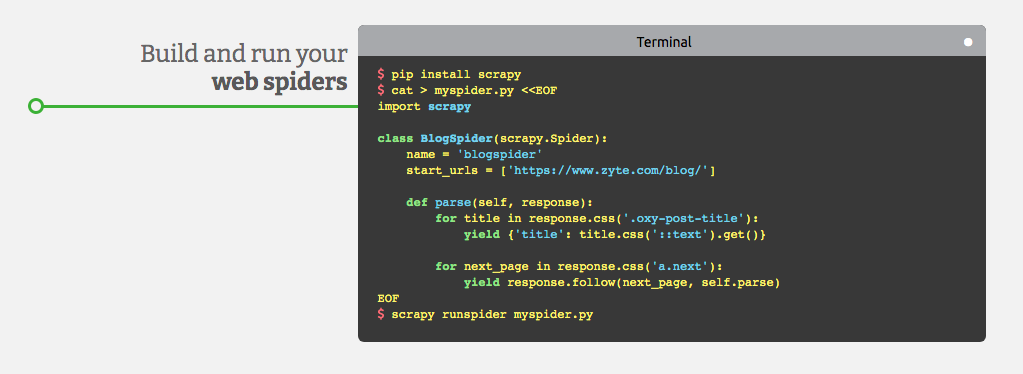

Scrapy

Scrapy is a free, open-source web-crawling framework written in Python for developers who want to build scalable web crawlers. You can fully customize your crawler for your needs, but, again, it requires advanced coding skills.

Scrapy runs on Linux, Windows, Mac, and BSD.

Pricing: free.

What You Should Consider When Choosing a Web Scraping Tool

- Ease of use. Much depends on your coding skills. If you are a non-coder, choose visual scrapers that require no special skills. Web scraping tools should save time and make your life easier, not more complicated. If you have a specific task, such as scraping competitors’ PPC ads, look for tools built specifically for that purpose, which let you solve it in a few clicks.

- Functionalities offered. Again, think about the marketing tasks you want to solve with web scraping. Make a list of your requirements and compare it with each tool’s features. Sometimes it makes sense to pay for one tool that includes all the functionality you need; other times, it is more advantageous to pay for one to three specialized, easy-to-use tools for particular tasks.

- Scalability. Think of how your requirements can change while your business develops and if the web scraping tool is flexible enough to meet your growing needs.

- Transparent pricing. Read the fine print. Sometimes only the basic functionality is included in the package, and additional services cost extra.

- Performance and crawling speed. Performance and speed can have immense importance if you work for an agency and need to scrape for many projects.

- Data formats supported. Check which formats a scraper supports. Most present scraped data as CSV, TXT, or Excel files. More sophisticated web scrapers can also deliver data in other formats, such as JSON or MySQL.

- Handling anti-scraping mechanisms. Some websites use protection against scraping. Look for tools that can handle it.

- Customer support. Read reviews about customer support quality. It’s crucial to have qualified support for the service you use.

Now that you are aware of these factors, create a shortlist of parsers for your tasks. Next, test their demo versions (or free trials) to understand which service best fits your needs. Finally, contact the support team with a few questions to learn how the service really works.

Sometimes you may see that the free version is enough for your tasks, or maybe it’s a one-time task you can solve during the free trial.

We hope this guide was helpful for you, and now you are armed with more powerful tools for your marketing success.

Do you want to automate and stay in control of your Google, Microsoft, and Facebook Ads campaigns? Try PromoNavi, a powerful all-in-one PPC toolkit for keyword research, competitor analysis, performance monitoring, reporting, and many other tasks. You can get started with a free 14-day trial right now!